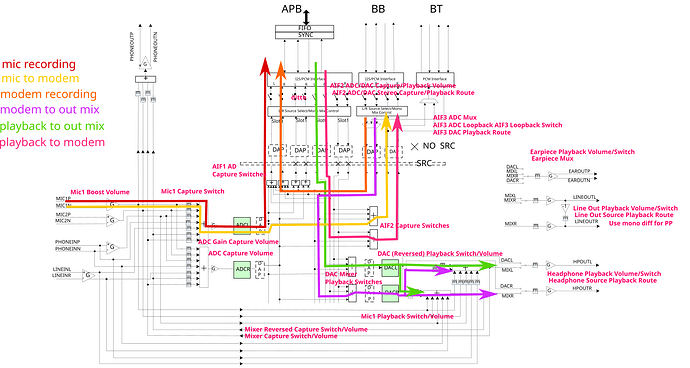

I mean… anything’s possible, I guess. That quote is describing a hardware diagram… specifically, this one:

If it’s labeled accurately, that diagram seems to show that the microphone and headphone interfaces are mono paths carried on a stereo interface. The Left channel is connected to the actual hardware (mic-in to the modem, for example, or call audio out from the modem to the earpiece/headphones, though that gets split to both channels on its way to the hardware). The Right channels then carry the paths you’re talking about, like the “playback to modem”.

So the key may be the “L/R source select” notation that all of those audio interfaces have. If you want to play audio out to the modem instead of having it capture the microphone, you may not need to route any audio paths differently at all; instead, mute the Left channel on the modem audio interface and unmute the Right channel.

If both channels can be enabled at once, you may even be able to unmute the Right channel and send playback audio to the modem while still talking over the mic. Otherwise, you may need to toggle those “AIF2 Capture Switches” to switch sources, which (as noted) would be done in ALSA.
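As a rough sketch of that idea: on a two-channel switch, amixer usually lists the Left value first and the Right value second, so picking a source comes down to which of the two values is `on`. The control name `AIF2 Capture Switch` below is only a placeholder (check `amixer -c 0 contents` for the real names on your card), `set_lr` is a hypothetical helper, and setting `AMIXER=echo` lets you preview the command without touching the mixer.

```shell
#!/usr/bin/env bash
# Sketch of the "L/R source select" idea: value 1 = Left, value 2 = Right.
# ASSUMPTIONS: card 0, and CONTROL is a placeholder name; look up the real
# AIF2 control names with `amixer -c 0 contents` before using this.
# Set AMIXER=echo to print the arguments instead of changing the mixer.
AMIXER="${AMIXER:-amixer}"
CARD="${CARD:-0}"
CONTROL="${CONTROL:-AIF2 Capture Switch}"   # hypothetical control name

# set_lr LEFT RIGHT: each argument must be "on" or "off"
set_lr() {
    case "$1,$2" in
        on,on | on,off | off,on | off,off) ;;
        *) echo "usage: set_lr on|off on|off" >&2; return 2 ;;
    esac
    "$AMIXER" -c "$CARD" cset "name=$CONTROL" "$1,$2"
}

# Mic only (Left on, Right off):        set_lr on off
# Playback to the modem only:           set_lr off on
# Both, if the hardware lets them mix:  set_lr on on
```

If `set_lr on on` is rejected or only one source comes through, that would suggest the two sources can’t be active together and you’re back to toggling between them.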

While this is true:

> You can dump the list of all controls using `alsactl store -f -`. You can also set these controls up using `alsamixer` or by modifying and loading the text file generated by `alsactl` via `alsactl restore -f <path>`.

It may be easier to use `amixer` to manipulate the interfaces, since you can change them directly from the command line instead of having to edit and load configuration files. (Once you’ve figured out the correct configuration, you can dump it with `alsactl` to make it loadable.)
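That experiment-then-save loop might look like this, assuming card 0 and an arbitrary state-file path (`STATE_FILE`, `save_state`, and `restore_state` are made-up names for illustration). `alsactl -f <file> store 0` writes the card’s controls to that file, and `alsactl -f <file> restore 0` loads them back.

```shell
#!/usr/bin/env bash
# Sketch of the "tweak with amixer, then save with alsactl" workflow for card 0.
# ASSUMPTION: STATE_FILE is just an example path; use any location you like.
# Set ALSACTL=echo to print the arguments instead of running alsactl.
ALSACTL="${ALSACTL:-alsactl}"
STATE_FILE="${STATE_FILE:-$HOME/modem-audio.state}"

# Write every control of card 0 to STATE_FILE (the same text format as `store -f -`).
save_state()    { "$ALSACTL" -f "$STATE_FILE" store 0; }

# Load the saved controls back onto card 0.
restore_state() { "$ALSACTL" -f "$STATE_FILE" restore 0; }

# Typical loop: adjust controls with `amixer -c 0 cset ...` until routing works,
# then run save_state; later, run restore_state to reapply that configuration.
```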

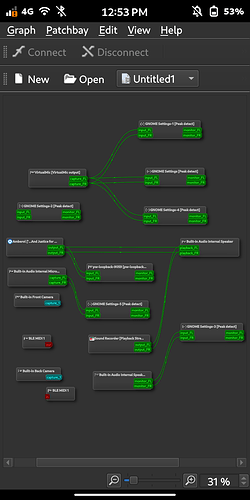

`amixer -c 0 contents` will spit out all of the controls for card 0 in a compact format that gives you useful information, like whether a given control is writable or not. For example, the motherboard audio interface on my desktop looks like this:

```
$ amixer -c 0 contents
numid=26,iface=CARD,name='Front Headphone Jack'
  ; type=BOOLEAN,access=r-------,values=1
  : values=off
numid=24,iface=CARD,name='Line Jack'
  ; type=BOOLEAN,access=r-------,values=1
  : values=off
numid=25,iface=CARD,name='Line Out Jack'
  ; type=BOOLEAN,access=r-------,values=1
  : values=off
numid=23,iface=CARD,name='Mic Jack'
  ; type=BOOLEAN,access=r-------,values=1
  : values=off
numid=27,iface=CARD,name='Speaker Phantom Jack'
  ; type=BOOLEAN,access=r-------,values=1
  : values=on
numid=22,iface=MIXER,name='Master Playback Switch'
  ; type=BOOLEAN,access=rw------,values=1
  : values=on
numid=21,iface=MIXER,name='Master Playback Volume'
  ; type=INTEGER,access=rw---R--,values=1,min=0,max=64,step=0
  : values=41
  | dBscale-min=-64.00dB,step=1.00dB,mute=0
numid=4,iface=MIXER,name='Headphone Playback Switch'
  ; type=BOOLEAN,access=rw------,values=2
  : values=off,off
numid=3,iface=MIXER,name='Headphone Playback Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=64,step=0
  : values=0,0
  | dBscale-min=-64.00dB,step=1.00dB,mute=0
numid=31,iface=MIXER,name='PCM Playback Volume'
  ; type=INTEGER,access=rw---RW-,values=2,min=0,max=255,step=0
  : values=251,251
  | dBscale-min=-51.00dB,step=0.20dB,mute=0
numid=20,iface=MIXER,name='Line Boost Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=3,step=0
  : values=0,0
  | dBscale-min=0.00dB,step=10.00dB,mute=0
numid=2,iface=MIXER,name='Line Out Playback Switch'
  ; type=BOOLEAN,access=rw------,values=2
  : values=off,off
numid=1,iface=MIXER,name='Line Out Playback Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=64,step=0
  : values=0,0
  | dBscale-min=-64.00dB,step=1.00dB,mute=0
numid=11,iface=MIXER,name='Line Playback Switch'
  ; type=BOOLEAN,access=rw------,values=2
  : values=off,off
numid=10,iface=MIXER,name='Line Playback Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=31,step=0
  : values=0,0
  | dBscale-min=-34.50dB,step=1.50dB,mute=0
numid=19,iface=MIXER,name='Mic Boost Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=3,step=0
  : values=0,0
  | dBscale-min=0.00dB,step=10.00dB,mute=0
numid=9,iface=MIXER,name='Mic Playback Switch'
  ; type=BOOLEAN,access=rw------,values=2
  : values=off,off
numid=8,iface=MIXER,name='Mic Playback Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=31,step=0
  : values=0,0
  | dBscale-min=-34.50dB,step=1.50dB,mute=0
numid=16,iface=MIXER,name='Capture Switch'
  ; type=BOOLEAN,access=rw------,values=2
  : values=off,off
numid=18,iface=MIXER,name='Capture Switch',index=1
  ; type=BOOLEAN,access=rw------,values=2
  : values=off,off
numid=15,iface=MIXER,name='Capture Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=31,step=0
  : values=0,0
  | dBscale-min=-13.50dB,step=1.50dB,mute=0
numid=17,iface=MIXER,name='Capture Volume',index=1
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=31,step=0
  : values=0,0
  | dBscale-min=-13.50dB,step=1.50dB,mute=0
numid=7,iface=MIXER,name='Loopback Mixing'
  ; type=ENUMERATED,access=rw------,values=1,items=2
  ; Item #0 'Disabled'
  ; Item #1 'Enabled'
  : values=0
numid=12,iface=MIXER,name='Auto-Mute Mode'
  ; type=ENUMERATED,access=rw------,values=1,items=3
  ; Item #0 'Disabled'
  ; Item #1 'Speaker Only'
  ; Item #2 'Line Out+Speaker'
  : values=0
numid=32,iface=MIXER,name='Digital Capture Volume'
  ; type=INTEGER,access=rw---RW-,values=2,min=0,max=120,step=0
  : values=60,60
  | dBscale-min=-30.00dB,step=0.50dB,mute=0
numid=13,iface=MIXER,name='Input Source'
  ; type=ENUMERATED,access=rw------,values=1,items=2
  ; Item #0 'Mic'
  ; Item #1 'Line'
  : values=0
numid=14,iface=MIXER,name='Input Source',index=1
  ; type=ENUMERATED,access=rw------,values=1,items=2
  ; Item #0 'Mic'
  ; Item #1 'Line'
  : values=0
numid=6,iface=MIXER,name='Speaker Playback Switch'
  ; type=BOOLEAN,access=rw------,values=2
  : values=on,on
numid=5,iface=MIXER,name='Speaker Playback Volume'
  ; type=INTEGER,access=rw---R--,values=2,min=0,max=64,step=0
  : values=64,64
  | dBscale-min=-64.00dB,step=1.00dB,mute=0
numid=29,iface=PCM,name='Capture Channel Map'
  ; type=INTEGER,access=r--v-R--,values=2,min=0,max=36,step=0
  : values=0,0
  | container
    | chmap-fixed=FL,FR
numid=28,iface=PCM,name='Playback Channel Map'
  ; type=INTEGER,access=r--v-R--,values=2,min=0,max=36,step=0
  : values=0,0
  | container
    | chmap-fixed=FL,FR
numid=30,iface=PCM,name='Capture Channel Map',device=2
  ; type=INTEGER,access=r--v-R--,values=2,min=0,max=36,step=0
  : values=0,0
  | container
    | chmap-fixed=FL,FR
```

So I can see the status of 5 different jacks (read-only). I have control over the Master, Headphone, PCM, Line Out, Line, Mic, and Speaker Playback Volumes, and every one of those except PCM also has a Playback Switch to turn it on or off. There are Line and Mic Boost Volume controls, selectors for things like Loopback Mixing, Auto-Mute Mode, and Input Source, plus capture switches and volumes. Finally, some channel mappings are presented; these are read-only (`access=r--v-R--`), since they’re there for informational purposes only.
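Since that listing is long, a small filter that keeps only the writable controls can help. This is a sketch that assumes the layout shown in the listing: each control starts with a `numid=` header line, and its `; type=` line carries an `access=` field whose second character is `w` when the control is writable. `writable_controls` is a made-up name.

```shell
#!/usr/bin/env bash
# Sketch: read `amixer contents` output on stdin and print the header line
# (numid=...,iface=...,name='...') of every control whose access flags mark it
# writable, i.e. the second character after "access=" is "w".
writable_controls() {
    awk '
        /^numid=/ {
            header = $0          # remember the current control header
            next
        }
        /^ *; type=/ {
            if (match($0, /access=[^,]*/)) {
                flags = substr($0, RSTART + 7, RLENGTH - 7)   # e.g. rw---R--
                if (substr(flags, 2, 1) == "w") print header
            }
        }
    '
}

# Usage: amixer -c 0 contents | writable_controls
```

The printed header lines carry the `numid` and `name` fields you’d use to pick a control for `cset`.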

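To change the value of a writable control, you can use `amixer -c 0 cset <id> <value>`. The `<id>` is built from the fields on the first line of each listed control: `numid=` on its own is enough, or you can use `name=` (plus `iface=`, `index=`, or `device=` where needed to make it unique, as with the two `'Capture Switch'` controls). So `amixer -c 0 cset iface=MIXER,name='Master Playback Volume' 50%` and `amixer -c 0 cset numid=21 32` would both set my card’s master volume to 50% of its raw range (32 out of a raw maximum of 64).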
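Note that 50% here means half of the raw range, not half the loudness. Using the `Master Playback Volume` numbers from the listing (`min=0`, `max=64`, `dBscale-min=-64.00dB`, `step=1.00dB`), raw 32 works out to -32 dB. Here’s a sketch of both conversions with hypothetical helper names, keeping dB in hundredths so shell integer arithmetic stays exact. amixer may round percentages slightly differently on ranges that don’t divide evenly.

```shell
#!/usr/bin/env bash
# Sketch: percent -> raw value, and raw value -> gain, for an INTEGER control
# with a linear dBscale TLV (dB = dBscale-min + (raw - min) * step).
# Helper names are hypothetical; dB values are in hundredths (centi-dB).

# pct_to_raw MIN MAX PCT
pct_to_raw() {
    local min=$1 max=$2 pct=$3
    echo $(( min + (max - min) * pct / 100 ))
}

# raw_to_centidb RAW MIN DBMIN_CENTI STEP_CENTI
raw_to_centidb() {
    local raw=$1 min=$2 dbmin=$3 step=$4
    echo $(( dbmin + (raw - min) * step ))
}

raw=$(pct_to_raw 0 64 50)                  # 32, same as: amixer -c 0 cset numid=21 32
cdb=$(raw_to_centidb "$raw" 0 -6400 100)   # -3200, i.e. -32.00 dB
echo "raw=$raw, gain=$(( cdb / 100 )) dB"
```

The same helper shows that my `PCM Playback Volume` at 251 sits just below 0 dB: `raw_to_centidb 251 0 -5100 20` gives -80, i.e. -0.80 dB.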