Thinking about it, I’m not sure whether, for things like font rendering, there is a raster operation at the end (I mean, when downscaling), or whether this is automatically accounted for by the transformations already set at rendering time. In the second case fonts would be accurately rendered at the final scale, because the client itself takes into account both the integer scale factor and the final raster size (I mean the physical size in screen pixels; I don’t know the right term for this).

More concretely, here is a fragment of code that exemplifies both alternatives. It’s not very interesting, because it just upscales by 2 and then downscales by 2. But in one case this is all done directly on the output surface, while in the other case there are two surfaces, the first twice the size of the second, with a raster scaling operation between them. In both cases the client renders everything at 2x.

```python
import cairo

width_px, height_px = 300, 100
font_size = 18
text = "Lorem Ipsum Lorem Ipsum"


def render(oversample, output_path):
    # With oversampling the first surface is twice the final size;
    # otherwise we draw straight into a surface of the final size.
    surface = cairo.ImageSurface(
        cairo.FORMAT_RGB24,
        (2 if oversample else 1) * width_px,
        (2 if oversample else 1) * height_px,
    )
    context = cairo.Context(surface)
    # Without oversampling, the 2x drawing below is scaled by 0.5 through
    # the context transformation, so no raster scaling is involved.
    context.scale(
        1 / (1 if oversample else 2),
        1 / (1 if oversample else 2),
    )

    # The "client" always draws at 2x: white background, black text.
    context.rectangle(0, 0, 2 * width_px, 2 * height_px)
    context.set_source_rgb(1, 1, 1)
    context.fill()
    context.set_source_rgb(0, 0, 0)
    context.select_font_face("Sans", cairo.FONT_SLANT_NORMAL, cairo.FONT_WEIGHT_NORMAL)
    context.set_font_size(2 * font_size)
    context.move_to(2 * width_px * 0.1, 2 * height_px * 0.5)
    context.show_text(text)

    if oversample:
        # Raster downscale: paint the 2x surface into a surface of the final size.
        surface2 = cairo.ImageSurface(cairo.FORMAT_RGB24, width_px, height_px)
        context2 = cairo.Context(surface2)
        context2.scale(0.5, 0.5)
        context2.set_source_surface(surface)
        context2.set_operator(cairo.OPERATOR_SOURCE)
        context2.paint()
        surface2.write_to_png(output_path)
    else:
        surface.write_to_png(output_path)


render(True, "scale_over.png")
render(False, "scale.png")
```

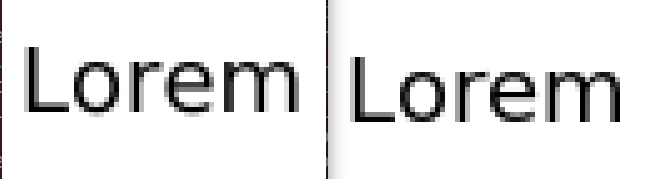

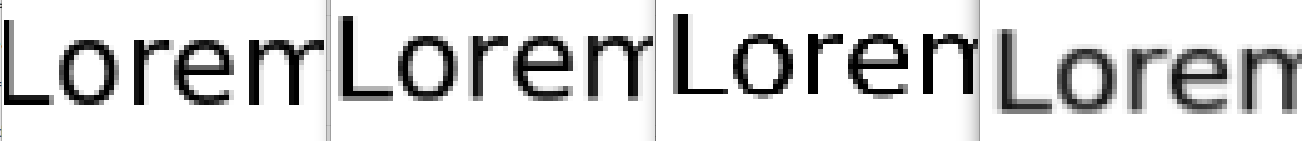

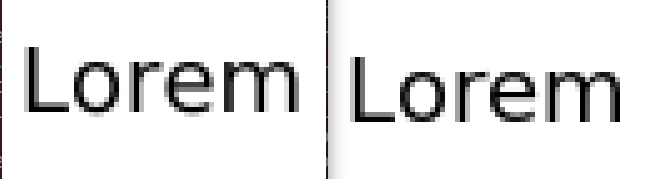

As the images above show, the outputs are rather different (I don’t know which scaling algorithm Cairo uses here; I assume it just rounds to the nearest pixel).

The left one has `oversample=True`, the right one has `oversample=False`. For this trivial example the right one is, of course, exactly the same as if no scaling had been applied at all, so it serves as the baseline.
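To make the “which scaling algorithm” doubt a bit more concrete, here is a small sketch in plain Python, without Cairo, of two ways a raster could be halved: keeping one pixel out of every 2x2 block, or averaging the block. Both functions (`downscale_nearest`, `downscale_box`) are hypothetical helpers written for illustration only; I’m not claiming either one is what Cairo does in the example above.

```python
def downscale_nearest(pixels):
    """Halve a grayscale raster by keeping the top-left pixel of each 2x2 block."""
    return [row[::2] for row in pixels[::2]]


def downscale_box(pixels):
    """Halve a grayscale raster by averaging each 2x2 block."""
    return [
        [
            (pixels[y][x] + pixels[y][x + 1]
             + pixels[y + 1][x] + pixels[y + 1][x + 1]) / 4
            for x in range(0, len(pixels[0]) - 1, 2)
        ]
        for y in range(0, len(pixels) - 1, 2)
    ]


# A one-pixel-wide black line (0.0) on white (1.0), drawn at 2x.
# It sits in an odd column, so the nearest-pixel version never samples it.
hires = [
    [1.0, 0.0, 1.0, 1.0],
    [1.0, 0.0, 1.0, 1.0],
]

print(downscale_nearest(hires))  # the thin line is lost entirely
print(downscale_box(hires))      # the thin line survives as a grey pixel
```

Either way, a detail that was drawn at 2x and is thinner than one final pixel cannot come out sharp after the raster step, which is the kind of difference I would expect between the two images.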

So which alternative better represents the facts, if any? I know it sounds as if I’m asking the same thing as at the beginning of this thread, but there is some ambiguity in saying “first upscaling then downscaling” that I would like to eliminate. At first I assumed that the downscaling would necessarily introduce distortion, but I’m no longer sure this is the case. In my mind, each client rendered to its own surface buffer, and those surfaces were then transformed and composed into an output buffer. But maybe those intermediate buffers are “abstracted away”, so that there are no actual intermediate buffers, only the output buffer, and hence no raster downscaling at all (as seems to be the case with xrandr). Maybe all this is obvious and was implicit in your answers; sorry if that’s the case, it’s just a new mindset I have to grasp.