However, most of the examples use a different technology, and note that Suzanne.js in the performance sample is not rendered with colors. Yes, there is an example with vertex and fragment shaders, but it uses cubes, and the code is too different for a technical comparison. Also, if the gthree area is a subclass of the GTK GL area, I still wonder what the real problem is in my initial question and in my initial app. Maybe the drivers or the OpenGL version? Is anything below relevant?

[giuliohome@localhost glarea]$ glxinfo | grep "version"

server glx version string: 1.4

client glx version string: 1.4

GLX version: 1.4

Max core profile version: 3.3

Max compat profile version: 3.0

Max GLES1 profile version: 1.1

Max GLES[23] profile version: 3.0

OpenGL core profile version string: 3.3 (Core Profile) Mesa 21.1.7

OpenGL core profile shading language version string: 3.30

OpenGL version string: 3.0 Mesa 21.1.7

OpenGL shading language version string: 1.30

OpenGL ES profile version string: OpenGL ES 3.0 Mesa 21.1.7

OpenGL ES profile shading language version string: OpenGL ES GLSL ES 3.00

I still see a warning that I can’t understand when I run my app:

(myglarea:36138): Gdk-WARNING **: 00:49:45.889: OPENGL:

Source: API

Type: Error

Severity: High

Message: GL_INVALID_ENUM in glDrawBuffers(invalid buffer GL_BACK)

It seems to be related to something internal to GTK+, perhaps the buffer swapping?

Edit

Interestingly, the warning above disappears if I log in to GNOME on Xorg instead of Wayland!

Edit 2

The warning also goes away under Wayland with

export GDK_BACKEND=wayland

export GSK_RENDERER=cairo

Indeed, it becomes a message instead of a warning, visible with

export GDK_DEBUG=opengl

and it reads Flushing GLX buffers for drawable… (more details below)

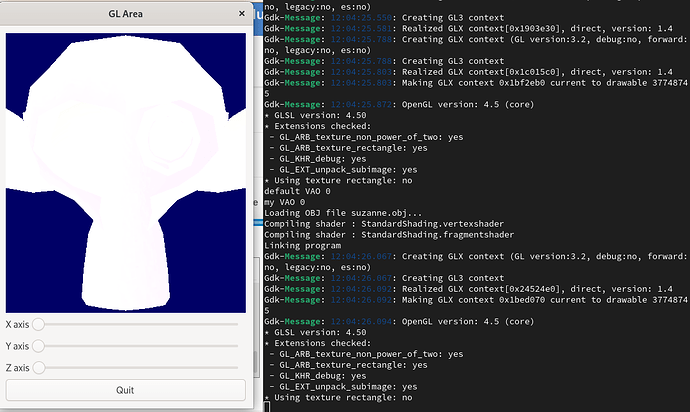

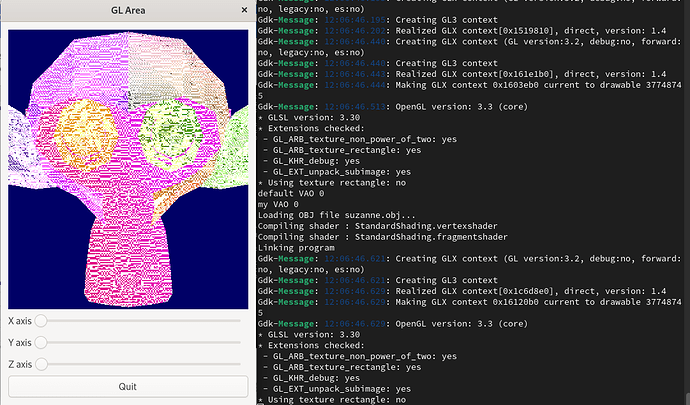

Gdk-Message: 11:41:54.053: OpenGL version: 3.3 (core)

* GLSL version: 3.30

* Extensions checked:

- GL_ARB_texture_non_power_of_two: yes

- GL_ARB_texture_rectangle: yes

- GL_KHR_debug: yes

- GL_EXT_unpack_subimage: yes

* Using texture rectangle: no

default VAO 0

my VAO 0

Loading OBJ file suzanne.obj...

Compiling shader : StandardShading.vertexshader

Compiling shader : StandardShading.fragmentshader

Linking program

Gdk-Message: 11:41:54.075: Making GLX context 0x20c4eb0 current to drawable 31457289

Gdk-Message: 11:41:54.076: Making GLX context 0x20c4eb0 current to drawable 31457294

Gdk-Message: 11:41:54.083: Flushing GLX buffers for drawable 31457294 (window: 31457284), frame sync: no
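To reproduce the debug output above without exporting the variables for the whole shell session, the three settings can be applied to a single command. This is only a sketch: `run_gdk_debug` is a hypothetical helper name, and `./myglarea` is an assumed path to the binary.

```shell
# Hypothetical helper: run one command with the GDK/GSK settings from this post,
# leaving the surrounding shell environment untouched.
run_gdk_debug() {
  env GDK_BACKEND=wayland GSK_RENDERER=cairo GDK_DEBUG=opengl "$@"
}

# Usage (assumes the app binary is ./myglarea in the current directory):
#   run_gdk_debug ./myglarea
```

Scoping the variables to one invocation makes it easier to compare a run with and without them in the same terminal.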

Anyway, even after fixing the warning via GNOME on Xorg, the original issue persists.

If I try the suggestion from this GitLab issue, namely using LIBGL_ALWAYS_SOFTWARE=true to switch to llvmpipe, I see OpenGL version 4.5 (core), and now Suzanne renders without colors.
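For anyone who wants to repeat the software-rendering test, here is a minimal sketch of how I would check that the switch really took effect before launching the app. `run_software_gl` is a hypothetical helper name, and `./myglarea` is again an assumed path.

```shell
# Hypothetical helper: run one command with Mesa's software rasterizer forced on.
run_software_gl() {
  env LIBGL_ALWAYS_SOFTWARE=true "$@"
}

# Usage (assumes glxinfo is installed and the app binary is ./myglarea):
#   run_software_gl glxinfo | grep "OpenGL renderer string"   # expected to mention llvmpipe on Mesa builds that include it
#   run_software_gl ./myglarea
```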