Thanks Sophie, that helps a ton! It’s a perspective I didn’t have and I’m really grateful both for bringing my attention to the existing issues, why they are issues and how local-first apps could help. That’s very useful!

Hi! The point of this project is to introduce a change at the platform level indeed, so app developers targeting the GNOME platform can take advantage of it.

Thank you for bringing up all these organisations and how they could benefit from it. That’s a valuable list to keep at hand for future collaboration in this field. With that said, I believe we should not get too carried away: we should start small and deliver results early, both to help people early on and to encourage donors to keep going with us.

How realistic is it to do something like this peer-to-peer between GNOME hosts? I think this is a forward-looking view of GNOME’s future and a step towards developing our own ecosystem. GNOME is becoming more convenient and popular every day, but the lack of an ecosystem can be a problem for many sections of society, because you have to invent your own solutions or use third-party services. Yes, GNOME doesn’t have a big commercial parent company at its core, but maybe that could lead us to more modern solutions? Perhaps p2p technologies will allow us to create a self-sufficient ecosystem, first to solve the problem of synchronizing settings and restoring the basic environment, and then for a wider range of connected tasks, up to interaction between devices in a distributed network through an API offered by the environment.

Of course, this should work simply and out of the box, and with a convenient permission system for third-party programs. If the system keeps my configuration up to date even with the transition to a new device, this will already be a big step towards a new experience. This could be a new security foundation to access a lost remote device when connected to a network, or do something about sensitive data when stolen. For example, we can store sensitive data encrypted even locally, and allow access to it only after the user has been verified in a decentralized network.

My point is that perhaps we should think about a secure ecosystem, at least for critical data and configuration data. This would allow GNOME to combine the best of both worlds: the ability to work both online and offline while, at least partially, getting rid of centralized servers. However, there are many things to consider in the architecture, such as security, reliability, simplicity, scalability, and modularity.

I’ve been thinking about how to get to a future where all your personal devices magically stay in sync with each other without any central service, no matter if that service is a cloud, a hosted server, a local server or just one device.

For example the model in bonsai still requires a central service and it brings with it all the failure modes of a cloud except that you’re now responsible for your cloud.

Looking at this problem naively is risky. There are already people in this thread who want a system which synchronizes files, but as you will hopefully see, that’s a horrible idea.

Assume that we have three devices A, B and C. We don’t want a central service which means that each device can make changes independently of any other device so we will end up with state Sa on device A, Sb on device B and so on.

In a system with a central service this doesn’t happen. Every device is in the same state but it requires the central service to be available for any device to make changes. If you can’t reach the google servers you can’t make changes to your google docs.

So how do we solve that issue without a central service? Can we just take Sa and send it to all other devices? Sure, that’s possible, but you lose the changes made to Sb and Sc. Typically, systems which synchronize files just ask the user which of the states they want to keep. You still lose changes, but at least you can choose what you want to lose. This is what’s called a conflict, and choosing one state is a conflict resolution strategy.

As users, what we really want is some magic which takes Sa, Sb and Sc and gives us a state Sabc which contains all the changes of all states. What exactly does this new state look like? Well, it depends on the application. For example, consider a text editor where you delete a word on one device and change the same word on another device: what’s the correct combined state? There isn’t an obviously correct answer to the question.

Either way, we know how to construct data types in such a way that if all nodes see all the changes made on all other nodes, the resulting state is the same on all nodes, and that no matter which changes any node sees, the state is a valid state. These data types are called CRDTs and they are amazing!
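To make the convergence property concrete, here is a minimal sketch of one of the simplest CRDTs, a grow-only counter. It is not taken from any particular library: the class name `GCounter` and the helpers `make_devices` and `converged_values` are made up for illustration. Each device only ever increases its own slot, and merging takes the element-wise maximum, so merging Sa, Sb and Sc in any order gives the same Sabc.

```python
from itertools import permutations


class GCounter:
    """Grow-only counter: one slot per device, merged with element-wise max."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.counts = {}  # device id -> number of increments made on that device

    def increment(self, amount=1):
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + amount

    def merge(self, other):
        # Element-wise max is commutative, associative and idempotent,
        # so the order and number of merges do not matter.
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    def value(self):
        return sum(self.counts.values())


def make_devices():
    # Three devices make changes independently (states Sa, Sb and Sc).
    a, b, c = GCounter("A"), GCounter("B"), GCounter("C")
    a.increment(2)
    b.increment(1)
    c.increment(4)
    return a, b, c


def converged_values():
    """Merge Sa, Sb and Sc in every possible order and collect the results."""
    results = set()
    for order in permutations(range(3)):
        devices = make_devices()
        target = GCounter("observer")  # hypothetical fourth replica
        for index in order:
            target.merge(devices[index])
        results.add(target.value())
    return results


print(converged_values())  # {7}: every merge order ends in the same state
```

Real applications need richer types (sets with removal, maps, text sequences), but they rely on the same idea of a merge that cannot conflict.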

I believe that CRDTs are the only sensible choice for any application synchronization which doesn’t want to require central services. CRDTs are unfortunately something that has to be implemented in each app specifically because the choice of CRDTs depends on the specific data model of the app.

CRDTs require a network layer which provides Reliable Causal Broadcast and to reach the goal of not relying on central services the system has to work with p2p connections.
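As a rough illustration of the "causal" part, the sketch below buffers a message until everything it depends on has been delivered, using vector clocks. It assumes each message carries the sender’s vector clock at send time; `CausalReceiver` and its method names are hypothetical, and the "reliable" part (retransmitting lost messages) is not covered here.

```python
class CausalReceiver:
    """Delivers broadcast messages only after everything they depend on."""

    def __init__(self, node_ids):
        self.delivered = {node: 0 for node in node_ids}  # messages delivered per sender
        self.pending = []  # messages that arrived too early
        self.log = []      # payloads in delivery order

    def _deliverable(self, sender, clock):
        # It must be the next message from this sender...
        if clock.get(sender, 0) != self.delivered[sender] + 1:
            return False
        # ...and must not depend on anything we have not delivered yet.
        return all(
            clock.get(node, 0) <= self.delivered[node]
            for node in self.delivered
            if node != sender
        )

    def receive(self, sender, clock, payload):
        if clock.get(sender, 0) <= self.delivered[sender]:
            return self.log  # duplicate of an already delivered message
        self.pending.append((sender, clock, payload))
        progress = True
        while progress:
            progress = False
            for message in list(self.pending):
                msg_sender, msg_clock, msg_payload = message
                if self._deliverable(msg_sender, msg_clock):
                    self.pending.remove(message)
                    self.delivered[msg_sender] += 1
                    self.log.append(msg_payload)
                    progress = True
        return self.log


# Device C receives B's edit before A's message that B's edit depends on.
device_c = CausalReceiver(["A", "B", "C"])
device_c.receive("B", {"A": 1, "B": 1, "C": 0}, "edit note")    # buffered
device_c.receive("A", {"A": 1, "B": 0, "C": 0}, "create note")  # unblocks both
print(device_c.log)  # ['create note', 'edit note']
```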

The first issue with p2p connections is that they require some sort of bootstrapping so that nodes can find each other. In the beginning this can be a central service, but there are distributed ways to achieve the same, using DHTs or Tor.

The second problem with p2p connections is NATs. This cannot be solved in general, only on a best-effort basis. You can hole-punch through a lot of NATs using UDP, but there are NATs where that will not succeed, and you either give up or use a proxy to still get a connection going.

Other issues then are encryption and making the broadcasting reliable and causal.

Realistically, it would be good to leverage projects which already deal with all this complexity, like libp2p.

So, enough of the technicalities of how to implement all of this. What would the future look like from a user’s perspective?

There should be a new settings panel for devices. You should be able to enroll a device into your personal devices group either via QR code or with some temporary key/code.

Applications should then synchronize their data to all the devices in the personal devices group. Tabs in the browser, settings, etc all should be synchronized. A music player should sync the metadata, ratings, etc but not the actual music. A markdown editor should synchronize the whole documents.
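For something like song ratings, one possible (not the only) choice is a last-writer-wins map, where each key keeps the write with the highest stamp. The sketch below is only an illustration under assumptions: `LWWMap` is a made-up name, stamps are a logical counter plus the device id as a tie-breaker, and only the small metadata entries travel between devices, not the music files.

```python
class LWWMap:
    """Last-writer-wins map: each key keeps the write with the highest stamp."""

    def __init__(self, device_id):
        self.device_id = device_id
        self.clock = 0     # Lamport-style logical clock, not wall-clock time
        self.entries = {}  # key -> ((clock, device_id), value)

    def set(self, key, value):
        self.clock += 1
        self.entries[key] = ((self.clock, self.device_id), value)

    def merge(self, other):
        for key, (stamp, value) in other.entries.items():
            current = self.entries.get(key)
            # Stamps are unique per write, so comparing them is a total order.
            if current is None or stamp > current[0]:
                self.entries[key] = (stamp, value)
        # Keep our clock ahead of everything we have seen.
        self.clock = max(self.clock, other.clock)

    def get(self, key):
        entry = self.entries.get(key)
        return entry[1] if entry else None


laptop, phone = LWWMap("laptop"), LWWMap("phone")
laptop.set("song-1", 3)  # rated 3 stars on the laptop
phone.set("song-1", 5)   # concurrently rated 5 stars on the phone
phone.set("song-2", 4)

laptop.merge(phone)
phone.merge(laptop)
print(laptop.entries == phone.entries)  # True: both devices converge
```

With this choice one of two concurrent ratings is silently dropped, which is probably acceptable for a rating but would not be for a whole markdown document; that is why the choice of CRDT depends on the app’s data model.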

In the even more distant future you should also be able to add other people to the system with which you then can collaborate on e.g. a document.

There is a lot more to all of this but it’s getting too long already.

tl;dr: Applications implement everything they want to sync with CRDTs (we can build a library for that). The system has to provide a network layer to send messages between devices.

All of this is doable today. It just needs someone doing it.

Indeed, one of the ideas behind this whole project is to develop an ecosystem and a platform developers can target to create local-first applications that can stay in sync.

Thanks for bringing these technical considerations to the brainstorming! Those are indeed challenges some of us are familiar with and keep in mind. CRDTs are certainly one of the solutions we are considering… but we’re not there yet!

At the moment, we are building the strategy to target populations who either have no access to the Internet, limited access, or untrusted access (e.g. in countries where governments can be snooping on what people are doing). The next step is to conduct a user study to have a better idea of what are the actual problems and needs these people have.

Once we have a good vision on their functional needs, we can take a step back and think of a solution that solves their problems and is extensible for future milestones, get designers to come up with a user friendly way of doing it, and finally get it implemented.

More to come on this initiative (hopefully) shortly


I really like the term “local first”.


It’s important to focus on specific app use-cases, one at a time. Generic tools like the old Conduit are not a great end-user experience.

Also, quick nod to a free software app already doing this: Joplin Notes, which can sync notes via various providers (Dropbox, Nextcloud, S3, WebDAV, an NFS mount… or their own “Joplin Cloud” server), with end-to-end encryption.

I really like the term “local first”.

As far as I know “local first” is an existing term and was not just made up for this proposal, but this was the first time I heard about it. To me it doesn’t seem to do a good job of communicating what it is about to people not already familiar with the term and not willing to read beyond headlines. When I first read “local first” my initial assumption was that this is about applications that keep data local to the machine they are running on and maybe add syncing as an afterthought or not at all. This is quite the opposite of what this is about.

I would prefer a term that lets people know that this is about a specific way of syncing, rather than making syncing seem like something that is not part of the target features.

TLDR: local-first is fundamental. Peer-to-peer synchronization is a last resort for situations without internet access. With full or degraded internet access, synchronization with decentralized services is important (preferably hosted by small human-scale organizations).

Having applications built local-first is the assurance that people can still access their data in a world without (at least permanent) internet access. I do think that this is a fundamental principle.

In the past years I have spoken with politically engaged people and activists, in a world with no lack of internet access (yet), but no one directly mentioned local-first applications.

The need they expressed to me was for efficient collaboration tools that they could trust.

- The key word here is collaboration, because this is mostly what activists do: they organize things, they communicate.

- Some of them feared for their privacy and some of them are geeks, but most are people with no real interest in technology, or even with technophobia. Their tools need to be efficient, because nowadays a lot of political communication and collaboration is done through Facebook groups, WhatsApp, Google Drive or Google Calendar. A decade after Snowden, activists still know nothing better for organizing themselves than the tech giants’ centralized, untrustworthy but user-friendly services.

- They need to trust their tools, because some of them told me that they were afraid to use tech giant tools. In the end they sometimes work unplugged (IRL talk, handwritten communication etc.), which is safer but far less efficient.

Local-first is a fundamental principle, but synchronization must not be forgotten. The former has a lot less value without the latter, as synchronization brings collaboration, and thus usage.

Synchronization, but with whom?

In a world without internet, easy peer-to-peer data exchange from one person to another might be a good thing to have (I am thinking of serverless synchronization over a local network or Bluetooth), though I have never met anyone who confirmed this need to me.

In a world with permanent or even intermittent internet access, the peer-to-peer synchronization approach sounds unrealistic to me.

Serverless synchronization from device to device is cumbersome when you want to communicate with a group of people. Local political groups involve dozens of people, and it is not always realistic, or safe, to gather everybody in the same room so you can exchange data with them.

Activists may not have the time or interest to learn self-hosting skills, as they are usually pretty busy doing activism.

Multiplying devices does not sound ecological or resource-efficient either (for example, if everyone hosts their own Raspberry Pi with YunoHost and a mail stack).

If centralization and distribution are not the best paradigms for cooperation, this leaves decentralization.

Small human-scale organizations hosting services for a community of a few hundred people seem like a good compromise IMHO.

I am thinking of something like what the CHATONS initiative from Framasoft tries to encourage.

Organizations of this size would be large enough that people can share the costs of infrastructure and maintenance, and not everybody needs to be an expert.

Yet they would be small enough that users can talk to, meet and trust the people who are hosting their data.

From a technical point of view, tackling the problem from this side (instead of serverless synchronization) has the benefit that there are already a lot of existing standards to use (IMAP, SMTP, WebDAV, CalDAV, CardDAV, OIDC etc.).

I would love GNOME to encourage the development of human-size hosting organizations by supporting and developing open synchronization standards.


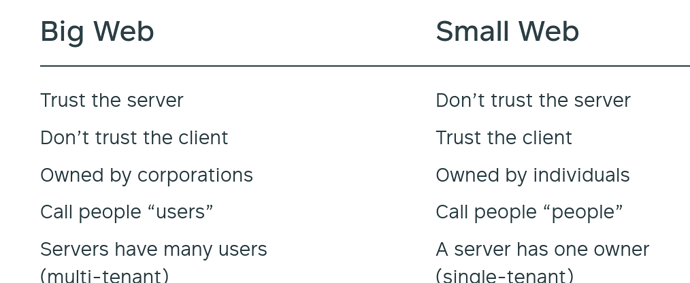

This sentence reminded me of another existing player here: the Small Technology Foundation, who have a concept of “Small Web”. The focus is on the web rather than the desktop, but the goals overlap very much.

“local first” is a term coined by web developers, where it makes a lot more sense than in the context of an operating system. Instead of storing app state on a server, local-first apps store it locally (localStorage) and then replicate that state to other clients with the use of CRDTs.

It’s not only about syncing though but also about collaboration. I agree that “local first” is not the best term either way.

I am so against this. Any kind of hosting requires new machines, resources to keep the machines operating and makes a central point of failure. People have tried this over and over without any success. Requiring extra hardware fails, requiring people to spend money on a service fails.

Over the last ~5 years, people have developed the technology to make this device-to-device synchronization and collaboration possible without central services and extra hardware. Not using this would be a huge mistake.

Note that I am not against the peer-to-peer synchronization principle. As I said it is great to have when internet access is broken. However I do not understand how it would scale to match the usage people have today, with abundant internet access and numerous online social interactions.

I may not have understood the whole picture though, so I am interested to hear fictional user stories where a large group exchanges data without any kind of hosting.

Say one needs to share a document with a dozen people around the country. How do you find the peers you want to synchronize your data with on the internet, without registering them on any kind of hosted service? Should you meet them IRL instead?

Once you have a completely decentralized system it’s easy to add back some degree of centralization.

For example if you have two devices which will never be able to connect directly to each other, maybe because they are never turned on at the same time or because the firewalls between them prevent this kind of access, you can introduce a third node which acts as a proxy-buffer for messages.
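A proxy-buffer of this kind can be very small. The sketch below is a toy in-memory version under assumptions: `RelayNode`, `submit` and `collect` are hypothetical names, and the messages are assumed to be encrypted by the sending device so the relay only ever stores opaque bytes.

```python
from collections import defaultdict, deque


class RelayNode:
    """Store-and-forward buffer for devices that are never online together."""

    def __init__(self):
        self.mailboxes = defaultdict(deque)  # recipient id -> queued messages

    def submit(self, recipient, ciphertext):
        # The relay only stores opaque bytes; it never needs to read them.
        self.mailboxes[recipient].append(ciphertext)

    def collect(self, recipient):
        # Hand over everything queued for this device, in arrival order,
        # and forget it afterwards.
        queue = self.mailboxes.pop(recipient, deque())
        return list(queue)


relay = RelayNode()
# The laptop is online in the morning and leaves two changes for the phone.
relay.submit("phone", b"encrypted-change-1")
relay.submit("phone", b"encrypted-change-2")
# The phone comes online in the evening and picks them up.
print(relay.collect("phone"))  # [b'encrypted-change-1', b'encrypted-change-2']
print(relay.collect("phone"))  # []
```

Because the synced state is a CRDT, it does not matter if the relay delivers a change late or more than once.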

If you have a group of hundreds of people, bandwidth becomes a problem if every change has to be sent to every other peer. With a suitable network and encryption layer, it’s possible to add nodes which are responsible for fan-out on behalf of clients to reduce their bandwidth.
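A back-of-the-envelope sketch of the difference, with made-up numbers (a group of 200 and a 2 kB change) and hypothetical function names: in a full mesh the author’s device uploads one copy per peer, while with a fan-out node it uploads a single copy.

```python
def uploads_per_change(group_size, via_fanout_node):
    """How many copies of one change the author's device must upload."""
    recipients = max(group_size - 1, 0)
    if recipients == 0:
        return 0
    return 1 if via_fanout_node else recipients


def upload_bytes(group_size, change_size, via_fanout_node):
    """Upload cost of one change for the author's device, in bytes."""
    return uploads_per_change(group_size, via_fanout_node) * change_size


print(upload_bytes(200, 2_000, via_fanout_node=False))  # 398000
print(upload_bytes(200, 2_000, via_fanout_node=True))   # 2000
```

The total traffic in the network is not reduced, it is only moved from the client to the fan-out node.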

All of those extra nodes could be your own devices, your peers’ devices or a third-party service. Starting with the decentralized p2p approach makes you way more flexible and also allows you to give up some degree of decentralization. Going the other way is either impossible or at least much harder.

That’s also a problem with hosted services. You need some kind of identifier for your peers and share that somehow. Granted, p2p systems often have identifiers which are not really usable for humans but this is just another example where introducing some degree of centralization could improve usability.

Gosh, this has been quite heated. To cool things down, may I talk about something?

You know, honestly, there is an absence of local-first and open source apps on proprietary platforms like Windows or Android.

The ecosystem there is dominated by commercial apps that are usually heavily influenced by cloud-first thinking, require online accounts where it doesn’t really make sense to have them, have poor privacy practices, and all that good stuff.

On Android, it’s even worse. Try searching for “QR Code Scanner” or “Whatsapp Status Downloader”: most of the apps are proprietary and even sketchy!

I wonder if the GNOME project and the foundation would like to promote an open source and local-first ecosystem on Windows and Android. Our Circle or core apps are too focused on Linux at the moment, and GDK hasn’t been ported to Android yet.

I think this is really important since I’m pretty sure most journalists out there still use Windows for their stuff.

As a platform developer and an app developer: I don’t use Windows, or Android. I mainly care about GNOME, and convincing people to move to GNOME. The GNOME ecosystem and platform gives me the tools to make good applications; and missing features/functionality in the platform is usually what pushes me to work on the platform itself. People enjoy saying that running GNOME apps on Windows/macOS will encourage people to migrate to GNOME, which fails to account for three things:

- porting is hard. It requires a lot of investment, and a lot of effort to ensure things keep working. We have a better story for Windows and macOS support in GTK4, but it’s always 5 minutes away from being undone because the number of people who understand GTK and understand how to write a toolkit on non-Linux platforms is impressively small. We can only document GTK; we can’t make people who understand Windows as a platform contribute to the Windows support in GTK.

- people don’t use free software apps anyway, unless they are getting screwed by companies that have started moving towards rentier economic models, with apps-as-a-service

- people won’t migrate their OS just because their applications also work on that OS; it’s a basic economic issue: why should they install a whole new OS if the application works the same on their current one? If an artist is using the GNU Image Manipulation Program, for example, they won’t only use GIMP all day, every day; they will also use their OS for other things, like chatting with friends, or playing games. Unless we have good support for those activities, porting applications won’t magically push people to using GNOME in the future, which means we don’t get more users for the platform that is the driving force of those applications.

We should have better applications; a better, more integrated environment; in short: we need to provide an incentive. Incidentally: no, software freedom is not enough of an incentive. Software freedom is good enough if you’re a programmer, and you have the tools to take advantage of the four freedoms; if you aren’t, it’s at most a useless feature.

Currently, the only thing that could push people to move to GNOME is corporations messing up their OS and applications; we need to get out more, and give people a reason to switch.

As a platform developer, I am also going to add: if you are missing a GDK backend for Android, please feel free to work on it. Assuming it’s even possible, given the nature of the Android platform. Same for iOS. The GNOME Foundation isn’t involved in porting GTK to other platforms: that’s the result of volunteers working within the project.

I understand that, I think it’s fine. You don’t have to care much about targeting platforms outside of Linux.

I can look for other people that would like to volunteer.

I’m looking forward to doing that. Though not now, since I have exams next week.

I think we need to get rid of our Linux evangelism here.

As the GNOME Project is non-profit, I think it would be counter-intuitive to intentionally push people into using our platform. It just feels like we’re looking to get as much money as possible from GNOME, the desktop environment.

As laid out in this discussion, we’re looking to protect people’s privacy, especially the privacy of important groups of people: journalists, security researchers, and marginalized groups.

Of course, only a small percentage of them are tech-literate enough to install Linux. Even among security researchers, many daily drive Windows, or at least Ubuntu. And I think we really should focus on saving them from the Windows ecosystem rather than making them switch to Linux, which of course they’ll refuse.

I think the folks at KDE did a really good job with Krita. Lots of non-tech-savvy artists use Krita on Windows professionally, and should they wish to switch to Linux, their tools are already available.

I think exclusivity as a means to attract people to a product is toxic for consumers. While it forces people to use the thing a product is exclusive to, it will make them hate the thing, which is not really what we’re looking for as a donation-dependent foundation.

Just by way of some interesting links: I met with Martin Kleppmann and learnt about the automerge CRDT library (Rust, yay) he is working on with others, and their work on defining the properties of what makes an effective local-first application and the benefits to users and developers of apps working in this way. At the moment automerge would need to be paired with something that handles storage and discovering network peers, but GNOME has pieces in its stack (and/or could work with upcoming storage / server / etc work in the automerge roadmap) that could make it a relatively easy lift to use automerge for some apps. This doesn’t neatly answer @thibaultamartin’s use case question but it’s an interesting technical direction.

If you go forward with this, you should also try to organize an online protest against the current wave of anti-encryption efforts, which, if successful, will almost certainly lead to client-side file scanning being included in all new devices, obliterating online privacy totally.

If they can view your data as soon as you connect to the Internet, it doesn’t matter if you’re using a local-first app or not; and it’s a given that many of the folks you wish to protect can’t afford a separate device that can be kept completely offline.

And even keeping devices offline may become impossible in the future, as politicians may demand that all new hardware be connected to a surveillance server at all times in order to function. This may seem far-fetched now, but cellular and satellite coverage is fast expanding…

Actually, I find it strange that such a protest hasn’t already begun; where has our fighting spirit gone? Remember how the whole Internet erupted in outrage over SOPA, with some even marching to the streets? I miss those hopeful days…
